Background

Head and neck cancer (HNC) is the sixth most common type of cancer worldwide, according to the 2022 cancer statistics [1]. This cancer type includes all malignancies that occur in the oral cavity, the larynx and the pharynx [2,3].

Treatment of HNC frequently leads to permanent changes in the patient's physiology and anatomy, often accompanied by permanent loss of swallowing and speech function. This burden severely affects patients' quality of life [4].

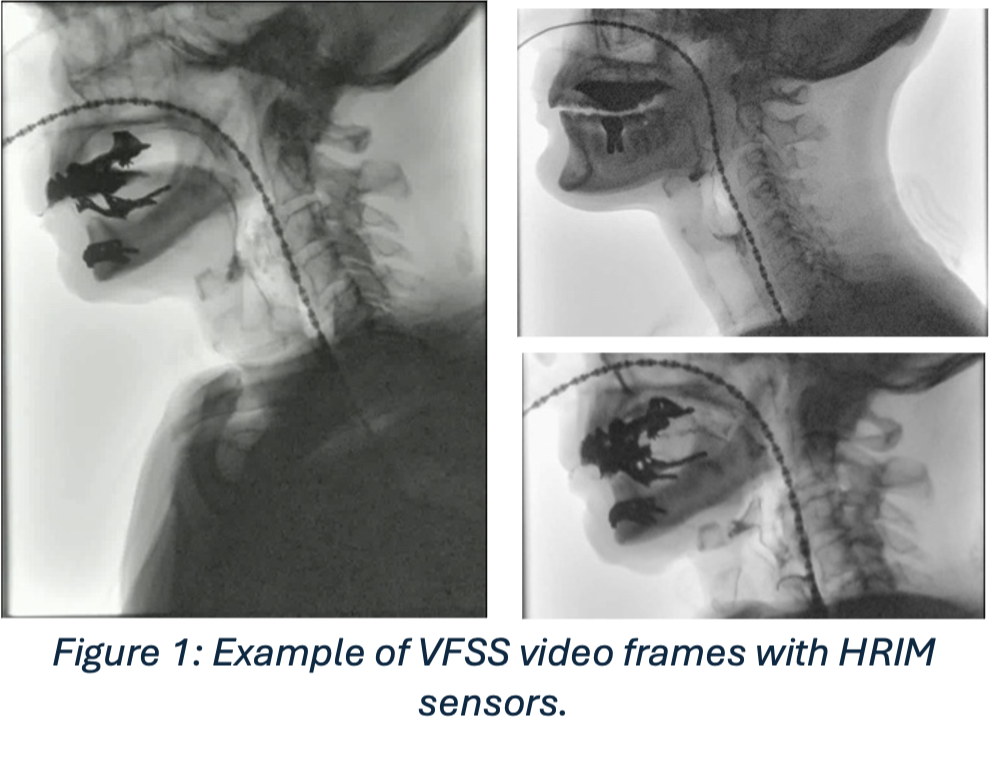

Combining video-fluoroscopic swallow studies (VFSS) with high-resolution impedance manometry (HRIM) promises a more accurate and comprehensive assessment of swallowing function in HNC patients.

Aim

Together with clinicians from the Netherlands Cancer Institute, we aim to register VFSS and HRIM to improve the accuracy of oropharyngeal dysphagia diagnosis in HNC patients.

In this assignment, we aim to develop a methodology that allows clinicians to draw the manometric regions in a GUI and that uses keypoints to keep those regions robust against patient motion and occlusions. The GUI must be ready to integrate the backend algorithms developed in the continuation of this project.
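One way keypoints could make a drawn region follow the patient is sketched below. It is only an illustration under stated assumptions, not the project's method: it assumes that matched keypoints are available in a reference frame and in the current frame, and that the motion between the two frames is well approximated by a 2D similarity transform (rotation, uniform scale, translation). The names `fit_similarity` and `move_region` are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def fit_similarity(ref_pts: List[Point], cur_pts: List[Point]) -> Tuple[complex, complex]:
    """Least-squares similarity transform q = a*p + b between matched keypoints.

    Points are treated as complex numbers, so `a` encodes rotation and
    uniform scale and `b` encodes translation. (Assumed motion model.)
    """
    if len(ref_pts) != len(cur_pts) or len(ref_pts) < 2:
        raise ValueError("need at least two matched keypoints")
    p = [complex(x, y) for x, y in ref_pts]
    q = [complex(x, y) for x, y in cur_pts]
    p_mean = sum(p) / len(p)
    q_mean = sum(q) / len(q)
    denom = sum(abs(pi - p_mean) ** 2 for pi in p)
    if denom == 0:
        raise ValueError("reference keypoints coincide")
    # Closed-form least-squares solution for the complex gain `a`.
    a = sum((qi - q_mean) * (pi - p_mean).conjugate() for pi, qi in zip(p, q)) / denom
    b = q_mean - a * p_mean
    return a, b


def move_region(region: List[Point], a: complex, b: complex) -> List[Point]:
    """Apply the fitted transform to the vertices of a drawn region."""
    moved = []
    for x, y in region:
        z = a * complex(x, y) + b
        moved.append((z.real, z.imag))
    return moved


# Hypothetical example: the patient rotates by 90 degrees and shifts by (2, 3).
ref_keypoints = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
cur_keypoints = [(2.0, 3.0), (2.0, 4.0), (1.0, 3.0)]
a, b = fit_similarity(ref_keypoints, cur_keypoints)
moved_region = move_region([(1.0, 1.0), (0.5, 0.5)], a, b)
```

Because the fit only needs the keypoint pairs that are passed in, keypoints that are occluded in the current frame can simply be left out, as long as at least two matched pairs remain.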

What we offer

- More than 100 swallow videos from real patients

- More than 3500 annotated video-frames

- A working framework for easy data manipulation and integration

- Backend algorithms: deep-learning manometer segmentation, deep-learning sensor detection, constrained Kalman-filter tracking