Background:

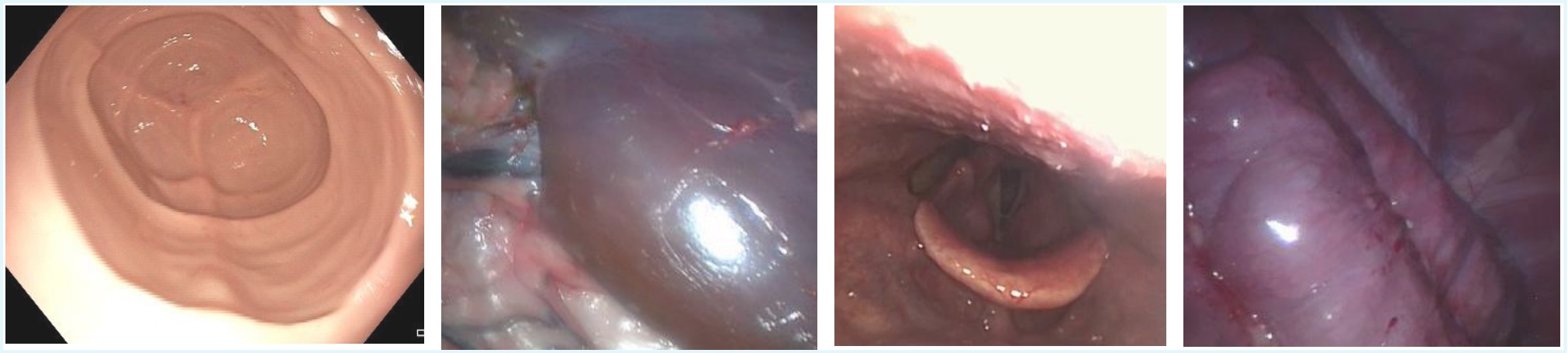

Minimally invasive surgery (MIS) relies on laparoscopic and endoscopic systems to reduce patient morbidity, shorten recovery, and lower perioperative complication rates [1]. However, laparoscopic and endoscopic images are frequently degraded by non-Lambertian specularities: intense, direction-dependent reflections that arise from a nearby, nearly coaxial light source, wet and smooth mucosal surfaces, and limited textural variation [2]. These highlights saturate sensor pixels, obscure the underlying anatomical structures, and introduce high-frequency artifacts that disturb both the surgeon's perception and automated image-analysis pipelines [3,4].

Clinically, these photometric distortions distract the operator and degrade the accuracy of instrument localization. Computationally, specular highlights undermine the performance of segmentation networks, feature-tracking algorithms, and monocular depth estimators. Similar artifacts also impair lesion delineation in colposcopy, reduce polyp-detection sensitivity in colonoscopy, and compromise laryngeal tissue classification [5]. Reliable, temporally coherent, learning-based monocular depth reconstruction therefore requires robust specularity detection and removal techniques that preserve the true color and structure of the tissue.

Aim:

- Use explainable AI techniques to detect and highlight non-Lambertian specular reflections in surgical images by generating adaptive binary masks that are easy to interpret across varying scenes.

- Test and compare ways to recover the appearance of tissues that were covered or distorted by glare, while keeping the color and texture realistic.

- Integrate the cleaned image sequences into a depth estimation pipeline and ensure that the predicted depth remains stable from frame to frame.

- Build a set of evaluation tools, both visual and numerical, to show how glare removal improves the clarity and accuracy of depth estimation in MIS procedures.
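To make the first and third aims more concrete, here is a minimal NumPy sketch of two classical baselines a student might start from. It is not the project's method or part of the starter codebase. The function names, the parameters `k` and `s_max`, and the threshold rule (bright and weakly saturated pixels are treated as glare) are illustrative assumptions, and the stability score ignores camera motion.

```python
import numpy as np


def specular_mask(rgb, k=2.0, s_max=0.25):
    """Return a boolean mask of likely specular pixels (illustrative baseline).

    rgb   : array of shape (H, W, 3), uint8 in [0, 255] or float in [0, 1].
    k     : hypothetical parameter, brightness threshold in standard deviations.
    s_max : hypothetical parameter, maximum saturation for a glare pixel.
    """
    rgb = np.asarray(rgb)
    img = rgb.astype(np.float64)
    if np.issubdtype(rgb.dtype, np.integer):
        img /= 255.0  # bring integer images into [0, 1]

    v = img.max(axis=2)                    # HSV value (brightness)
    c_min = img.min(axis=2)
    s = (v - c_min) / np.maximum(v, 1e-12)  # HSV saturation, 0 for black pixels

    # Adaptive threshold: the cut-off follows each frame's own brightness statistics.
    thresh = v.mean() + k * v.std()
    return (v > thresh) & (s < s_max)


def frame_to_frame_change(depths):
    """Mean absolute depth change between consecutive frames.

    depths : array of shape (T, H, W). Lower values mean a more stable
    prediction, assuming a near-static camera (no motion compensation here).
    """
    depths = np.asarray(depths, dtype=np.float64)
    return float(np.abs(np.diff(depths, axis=0)).mean())


# Synthetic example: reddish "tissue" with one small white highlight.
frame = np.empty((64, 64, 3))
frame[:] = (0.6, 0.2, 0.2)
frame[10:14, 20:24] = 1.0
mask = specular_mask(frame)
```

On this synthetic frame, only the 4×4 white patch is flagged. Real endoscopic footage would likely need per-dataset tuning or a learned detector, which is what the project sets out to investigate.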

What we offer:

- A video dataset of 25 anonymized endoscopic clips (variable length, 30 fps), as well as laparoscopic video clips.

- Starter PyTorch/TensorFlow codebase.

- Expert guidance in leveraging Explainable AI.

What we look for:

A student who:

- Is comfortable writing and debugging code in Python, and is familiar with PyTorch/TensorFlow or eager to learn it.

- Has an interest in convolutional neural networks, image filtering, and saliency concepts.

- Takes the initiative to troubleshoot challenges independently and drive the project forward.

If you are a student and would like to find out more about this assignment, please send an email to m.khan@utwente.nl or m.m.rocha@utwente.nl.

References:

[1] Luo, X.; Mori, K.; Peters, T.M. Advanced endoscopic navigation: Surgical big data, methodology, and applications. Annu. Rev. Biomed. Eng. 2018, 20, 221–251.

[2] Fengze, L.; et al. A comprehensive survey of specularity detection: State-of-the-art techniques and breakthroughs. Artif. Intell. Rev. 2025, 58, 1–53.

[3] Sdiri, B.; Beghdadi, A.; Cheikh, F.A.; Pedersen, M.; Elle, O.J. An adaptive contrast enhancement method for stereo endoscopic images combining binocular just noticeable difference model and depth information. Electron. Imaging 2016, 28, 1–7.

[4] Artusi, A.; Banterle, F.; Chetverikov, D. A survey of specularity removal methods. Comput. Graph. Forum 2011, 30, 2208–2230.

[5] Kacmaz, R.N.; Yilmaz, B.; Aydin, Z. Effect of interpolation on specular reflections in texture-based automatic colonic polyp detection. Int. J. Imaging Syst. Technol. 2021, 31, 327–335.